R

In R, extending the previous example is almost trivially easy. The coef() function, applied to a glm summary object, returns an array with the parameter estimate, standard error, test statistic, and p-value. In one statement, we can extract the p-value for the interaction and return an indicator of a rejected null hypothesis. (This line is commented on below.) Then the routine is wrapped as a trivial function.

logist_inter = function() {

c = rep(0:1,each=50) # sample size is 100

x = rnorm(100)

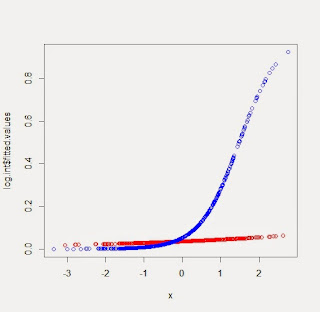

lp = -3 + 2*c*x

link_lp = exp(lp)/(1 + exp(lp))

y = (runif(100) < link_lp)

log.int = glm(y~as.factor(c)*x, family=binomial)

reject = ifelse( coef(summary(log.int))[4,4] < .05, 1, 0)

# The coef() function above gets the parameter estimates; the [4,4]

# element is the p-value for the interaction.

return(reject)

}

Running the function many times is also trivial, using the replicate() function.

pow1 = replicate(100, logist_inter())The result is an array of 1s and 0s. To get the estimated power and confidence limits, we use the binom.test() function.

binom.test(sum(pow1), 100)The test gives a p-value against the null hypothesis that the probability of rejection is 0.5, which is not interesting. The interesting part is at the end.

95 percent confidence interval:

0.3219855 0.5228808

sample estimates:

probability of success

0.42

It would be simple to adjust this code to allow a change in the number of subjects or of the effect sizes, etc.

SAS

In SAS, generating the data is no trouble, but evaluating the power programmatically requires several relatively cumbersome steps. To generate multiple data sets, we include the data generation loop from the previous entry within another loop. (Note that the number of observations has also been reduced vs. the previous entry.)

data test;

do ds = 1 to 100; #100 data sets

do i = 1 to 100; #100 obs/data set

c = (i gt 50);

x = normal(0);

lp = -3 + 2*c*x;

link_lp = exp(lp)/(1 + exp(lp));

y = (uniform(0) lt link_lp);

output;

end;

end;

run;

Then we fit all of the models at once, using the by statement. Here, the ODS system suppresses voluminous output and is also used to capture the needed results in a single data set. The name of the piece of output that holds the parameter estimates (parameterestimates) can be found with the ods trace on statement.

ods select none; ods output parameterestimates= int_ests; proc logistic data = test ; by ds; class c (param = ref desc); model y(event='1') = x|c; run; ods exclude none;

The univariate procedure can be used to count the number of times the null hypothesis of no interaction would be rejected. To do this, we use the loccount option to request a table of location counts, and the mu0 option to specify that the location of interest is 0.05. As above, since our goal is to use the count programmatically, we also extract the result into a data set. If you're following along at home, it's probably worth your while to print out some of this data to see what it looks like.

ods output locationcounts=int_power; proc univariate data = int_ests loccount mu0=.05; where variable = "x*c"; var probchisq; run;For example, while the locationcounts data set reports the number of observations above and below 0.05, it also reports the number not equal to 0.05. This is not so useful, and we need to exclude this row from the next step. We do that with a where statement. Then proc freq gives us the proportion and (95%) confidence limits we need, using the binomial option to get the confidence limits and the weight statement to convey the fact that the count variable represents the number of observations.

proc freq data = int_power; where count ne "Num Obs ^= Mu0"; tables count / binomial; weight value; run;Finally, we find our results:

Binomial Proportion

Count = Num Obs < Mu0

Proportion 0.4000

ASE 0.0490

95% Lower Conf Limit 0.3040

95% Upper Conf Limit 0.4960

Exact Conf Limits

95% Lower Conf Limit 0.3033

95% Upper Conf Limit 0.5028

We estimate our power at only 40%, with a confidence limit of (30%, 50%). This agrees closely enough with R: we don't need to narrow the limit to know that we'll need a larger sample size.

An unrelated note about aggregators: We love aggregators! Aggregators collect blogs that have similar coverage for the convenience of readers, and for blog authors they offer a way to reach new audiences. SAS and R is aggregated by R-bloggers, PROC-X, and statsblogs with our permission, and by at least 2 other aggregating services which have never contacted us. If you read this on an aggregator that does not credit the blogs it incorporates, please come visit us at SAS and R. We answer comments there and offer direct subscriptions if you like our content. In addition, no one is allowed to profit by this work under our license; if you see advertisements on this page, the aggregator is violating the terms by which we publish our work.